I don’t live in my body.

I was 48 years old before I discovered this. Now, such a basic fact, you might think, would be intuitively obvious much earlier. But I’ve only (to my knowledge) been alive this once, and I haven’t had the experience of living as anyone else, so I think I might be forgiven for not fully understanding the extent to which my experience of the world is not everyone’s experience of the world.

Ah, if only we could climb behind someone else’s eyes and feel the world the way they do.

Anyway, I do not live in my body. My perception of my self—my core essence, if you will—is a ball that floats somewhere behind my eyes, and is carried about by my body.

Oh, I feel my body. It relays sensory information to me. I am aware of hot and cold (especially cold; more on that in a bit), soft and hard, rough and smooth. I feel the weight of myself pressing down on my feet. I am aware of the fact that I occupy space, and of my position in space. (Well, at least to some extent. My sense of direction is a bit rubbish, as anyone who’s known me for more than a few months can attest.)

But I don’t live in my body. It’s an apparatus, a biological machine that carries me around. “Me” is the sphere floating just behind my eyes.

And as I said, I didn’t even know this until I was 48.

This is not, as it turns out, my only perceptual anomaly.

I also perceive cold as pain.

When I say this, a lot of folks don’t really understand what I mean. I do not mean that cold is uncomfortable. I mean that cold is painful. An ice cube on my bare skin hurts. A lot. A cold shower is excruciating agony, and I’m not being hyperbolic when I say this. (Being wet is unpleasant under the best of circumstances. Cold water is pure agony. Worse than stubbing a toe, almost on par with touching a hot burner.)

I’ve always assumed that other people perceive cold more or less the same way I do. There’s a trope that cold showers are an antidote to unwanted sexual arousal; I’d always thought that was because the pain shocks you out of any kind of sexy head space. And swimming in ice water? That was something that a certain breed of hard-core masochist did. Some folks like flesh hook suspension; some folks swim in ice water. Same basic thing.

I’ve only recently become aware that there’s actually a medical term for this latter condition: congenital thermal allodynia. It’s an abnormal coding of pain, and it is, I think, related to the not-living-in-my-body thing.

I probably would have discovered all of this much sooner if I’d been interested in recreational drug use as a youth. And it appears there may be a common factor in both of these atypical ways I perceive the world.

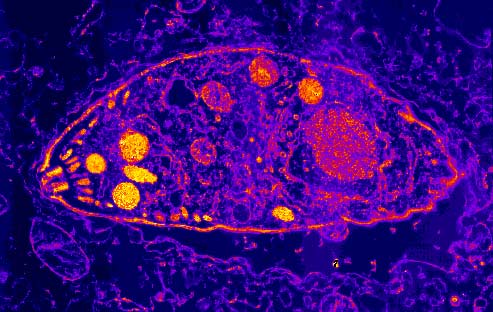

Ladies and gentlebeings, I present to you: TRPA1.

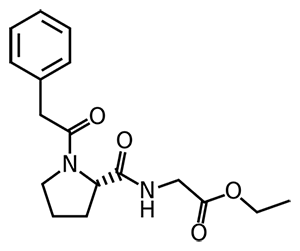

This is TRPA1. It’s a complex protein that acts as a receptor in nerve and other cells. It responds to cold and to the presence of certain chemicals (menthol acts on it, though menthol’s cooling sensation is usually attributed mainly to a related receptor, TRPM8). Variations in the structure of TRPA1 are implicated in a range of abnormal perceptions of pain; there’s a single nucleotide polymorphism in the gene that codes for TRPA1, for instance, that results in a medical condition called “familial episodic pain syndrome,” whose unfortunate sufferers are wracked by intermittent spasms of agonizing and debilitating pain, often triggered by…cold.

I’ve lived this way my entire life, completely unaware that it’s not the way most folks experience the world. It wasn’t until I started my first tentative explorations down the path of recreational pharmaceuticals that I discovered there was any other way to be.

For nearly all of my life, I’ve never had the slightest interest in recreational drug use, despite what certain of my relatives believed when I was a teenager. Aside from alcohol, I had zero experience with recreational pharmaceuticals until I was in my late 40s.

The first recreational drug I ever tried was psilocybin mushrooms. I’ve had several experiences with them now, which have universally been quite pleasant and agreeable.

But it’s the aftereffects of a mushroom trip that are, for me, the really interesting part.

The second time I tried psilocybin mushrooms, about an hour or so after the comedown from the mushroom trip, I had the sudden and quite marked experience of completely inhabiting my body. For the first time in my entire life, I wasn’t a ball of self being carried around by this complex meat machine; I was living inside my body, head to toe.

The effect of being-in-my-bodyness persisted for a couple of hours after all the other traces of the drug trip had gone, and for a person who’s spent an entire lifetime being carried about by a body but not really being in that body, I gotta say, man, it was amazing.

So I did what I always do: went on Google Scholar and started reading neurobiology papers.

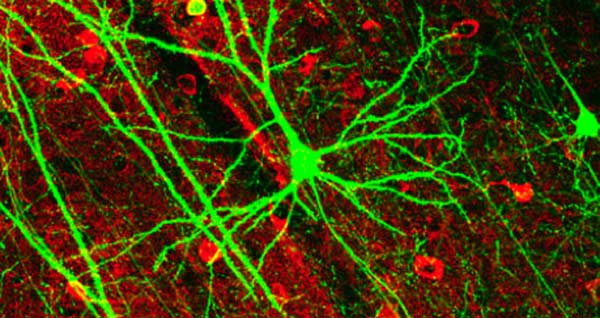

My first hypothesis, born of vaguely remembered classes in neurobiology many years ago and general folk wisdom about psilocybin and other hallucinogens, was that the psilocybin (well, technically, psilocin, a metabolite of psilocybin) acted as a particularly potent serotonin agonist, dramatically increasing brain activity, particularly in the pyramidal cells in layer 5 of the cortex. If psilocybin lowered the activation threshold of these cells, reasoned I, then perhaps I became more aware of my body because I was better able to process existing sensory stimulation from the peripheral nervous system, and/or better able to integrate my somatosensory perception. It sounds plausible, right? Right?

Alas, some time on Google Scholar deflated that hypothesis. It turns out that the conventional wisdom about how hallucinogens work is quite likely wrong.

Conventional wisdom is that hallucinogens promote neural activity in cells that express serotonin receptors by mimicking the action of serotonin, causing the cells to fire. Hallucinogens aren’t well understood, but it’s looking like this model is probably not correct.

Oh, don’t get me wrong, psilocybin is a serotonin agonist and it does lower the activation threshold of pyramidal cells, oh yes.

The fly in the ointment is that evidence from fMRI studies, including BOLD imaging, shows an overall inhibition of brain activity resulting from psilocybin. Psilocybin promotes activation of excitatory pyramidal cells, sure, but it also promotes activation of inhibitory GABAergic neurons, resulting in overall decreased activity in several other parts of the brain. Further, this activity in the pyramidal cells produces less overall cohesion of brain activity, as this paper from the Proceedings of the National Academy of Sciences explains. (It’s a really interesting article. Go read it!)

My hypothesis that psilocybin promotes the subjective experience of greater somatosensory integration by lowering the activation threshold of pyramidal cells, therefore, seems suspect, unless perhaps we were to further hypothesize that this lowered activation threshold persisted after the mushroom trip was over, an assertion for which I can find no support in the literature.

So lately I’ve been thinking about TRPA1.

I drink a lot of tea. Not as much, perhaps, as my sweetie, but a lot nonetheless.

Something I learned a long time ago is that the sensation of being wet is extremely unpleasant, but it’s more tolerable after I’ve had my morning tea. I chalked that up to it being more unpleasant when I was sleepy than when I was awake.

It turns out caffeine is a mild TRPA1 inhibitor. That leads to the hypothesis that for all these years, I may have been self-medicating with caffeine without being aware of it. If TRPA1 is implicated in the more unpleasant somatosensory bits of being me, then caffeine may jam up the gubbins and let me function in a way that’s a closer approximation to the way other folks perceive the world. (Insert witty quip about not being fully human before my morning tea here.)

So then I started to wonder, what if psilocybin is connecting me with my body by influencing TRPA1 activity? Could that explain the aftereffects of a mushroom trip? When I’m in my body, I feel warm and, for lack of a better word, glowy. My sense of self extends downward and outward until it fills up the entire biological machine in which I live. Would TRPA1 inhibition explain that?

Google Scholar offers exactly fuckall on the effects of psilocybin on TRPA1. So I turned to other searches, trying to find other drugs or substances that promoted a subjective experience of greater connection with one’s own body.

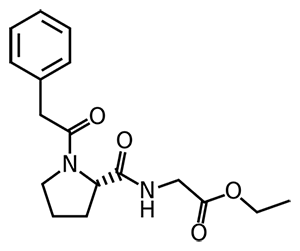

I found anecdotal reports of what I was after from people who used N-phenylacetyl-L-prolylglycine ethyl ester, a supplement developed in Russia and sold as a cognitive enhancer under the Russian name Ноопепт and the English name Noopept. It’s widely sold as a nootropic. New Agers and the fringier elements of the transhumanist movement, two groups I tend not to put a lot of faith in, tout it as a brain booster.

Still, noopept is cheap and easily available, and I figured as long as I was experimenting with my brain’s biochemistry, it was worth a shot.

To hear tell, this stuff will do everything from making you smarter to preventing Alzheimer’s. Real evidence that it does much of anything is thin on the ground, with animal models showing some protective effect against some forms of brain trauma but human trials being generally small and unpersuasive.

I started taking it, and noticed absolutely no difference at all. Still, animal models suggest it takes quite a long time to have maximum effect, so I kept taking it.

About 40 days after I started, I woke up with the feeling of being completely in my body. It didn’t last long, but over the next few weeks, it came and went several times, typically for no more than an hour or two at a time.

But oh, what an hour. When you’ve lived your whole life as a ball being carted around balanced atop a bipedal biological machine, feeling like you inhabit your body is amazing.

The last time it happened, I was in the Adventure Van driving toward the cabin where I am currently writing not one, not two, but three books (a nonfiction followup to More Than Two titled Love More, Be Awesome, and two fiction books set in a common world, called Black Iron and Gold Gold Gold!). We were listening to music, as we often do when we travel, and I…felt the music. In my body.

I’d always more or less assumed that people who talk about “feeling music” were being metaphorical, not literal. Imagine my surprise.

I also noticed something intriguing: Feeling cold will, when I’m in my body, push me right back out again. Hence my hypothesis that not being connected with my body might in some way be related to TRPA1.

The connection with my body, intermittent and tenuous for the past few weeks, has disappeared again. I’m still taking noopept, but I haven’t felt like I’m inhabiting my body for the past couple of weeks. That leads to one of two suppositions: the noopept is not really doing anything at all, which is quite likely, or I’m developing a tolerance for noopept, which seems less likely but I suppose is possible. Noopept is a racetam-like peptide; like members of the racetam class, it is an acetylcholine agonist, and while I can’t find anything in the literature about noopept tolerance, tolerance of other acetylcholine agonists (though not, as near as I can tell, racetam-like acetylcholine agonists) has been observed in animal models.

So there’s that.

The literature on all of this has been decidedly unhelpful. I like the experience of completely inhabiting my body, and would love to find a way to do this all the time.

I’m currently pondering three experiments. First, next time I take mushrooms (and my experiences with mushrooms, limited though they are, have universally been incredibly positive; while I have no desire to take them regularly, I probably will take them again at some point in the future), I am planning to set up experiments after the comedown where I expose myself to water and cold sensations to see if the pain is reduced or eliminated in the phase during which I’m connected to my body.

Second, I’m planning to discontinue noopept for a month or so, then resume it to see if the problem is tolerance.

I’m fifty years old and I’m still learning how to be a human being. Life is a remarkable thing.


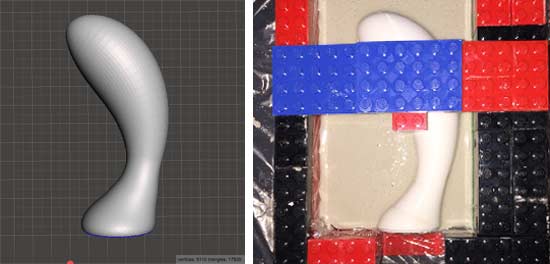

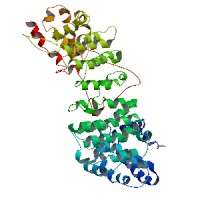

Consider this protein. It’s a model of a protein called AVPR-1a, which is used in brain cells as a receptor for the neurotransmitter called vasopressin.

Consider this protein. It’s a model of a protein called AVPR-1a, which is used in brain cells as a receptor for the neurotransmitter called vasopressin.

Yet she only votes Republican. Why? Because she says she believes, as the Republicans believe, that poor people should just get jobs instead of lazing about watching TV and sucking off hardworking taxpayers’ labor.

Yet she only votes Republican. Why? Because she says she believes, as the Republicans believe, that poor people should just get jobs instead of lazing about watching TV and sucking off hardworking taxpayers’ labor.

This is especially true if the belief has some emotional vibrancy. There is a part of the brain called the amygdala which is, among other things, a kind of “emotional memory center.” That’s a bit oversimplified, but essentially true; when you recall a memory that has an emotional charge, the amygdala mediates your recall of the emotion that goes along with the memory; you feel that emotion again. When you rehearse the reasons you first subscribed to your belief, you re-experience the emotions again–reinforcing it and making it feel more compelling.

This is especially true if the belief has some emotional vibrancy. There is a part of the brain called the amygdala which is, among other things, a kind of “emotional memory center.” That’s a bit oversimplified, but essentially true; when you recall a memory that has an emotional charge, the amygdala mediates your recall of the emotion that goes along with the memory; you feel that emotion again. When you rehearse the reasons you first subscribed to your belief, you re-experience the emotions again–reinforcing it and making it feel more compelling. If you’re afraid of nuclear power, that argument didn’t make a dent in your beliefs. You mentally went back over the reasons you’re afraid of nuclear power, and your amygdala reactivated your fear…which in turn prevented you from seriously considering the idea that nuclear might not be as dangerous as you feel it is.

If you’re afraid of nuclear power, that argument didn’t make a dent in your beliefs. You mentally went back over the reasons you’re afraid of nuclear power, and your amygdala reactivated your fear…which in turn prevented you from seriously considering the idea that nuclear might not be as dangerous as you feel it is.

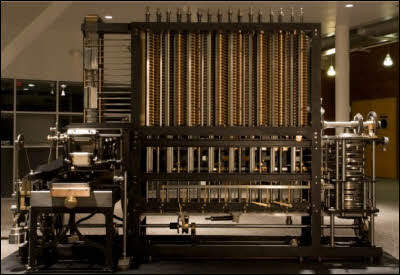

Babbage reasoned–quite accurately–that it was possible to build a machine capable of mathematical computation. He also reasoned that it would be possible to construct such a machine in such a way that it could be fed a program–a sequence of logical steps, each representing some operation to carry out–and that on the conclusion of such a program, the machine would have solved a problem. Ths last bit differentiated his conception of a computational engine from other devices (such as the Antikythera mechanism) which were built to solve one particular problem and could not be programmed.

Babbage reasoned–quite accurately–that it was possible to build a machine capable of mathematical computation. He also reasoned that it would be possible to construct such a machine in such a way that it could be fed a program–a sequence of logical steps, each representing some operation to carry out–and that on the conclusion of such a program, the machine would have solved a problem. Ths last bit differentiated his conception of a computational engine from other devices (such as the Antikythera mechanism) which were built to solve one particular problem and could not be programmed. Now, when I was in school studying neurobiology, things were very simple. You had two kinds of cells in your brain: neurons, which did all the heavy lifting involved in the process of cognition and behavior, and glial cells, which provided support for the neurons, nourished them, repaired damage, and cleaned up the debris from injury or dead cells.

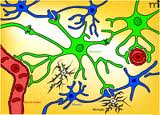

Now, when I was in school studying neurobiology, things were very simple. You had two kinds of cells in your brain: neurons, which did all the heavy lifting involved in the process of cognition and behavior, and glial cells, which provided support for the neurons, nourished them, repaired damage, and cleaned up the debris from injury or dead cells.

Right now, most attempts to model the brain look only at the neurons, and disregard the glial cells. Now, there’s value to this. The brain is really (really really really) complex, and just developing tools able to model billions of cells and hundreds or thousands of billions of interconnections is really, really hard. We’re laying the foundation, even with simple models, that lets us construct the computational and informatics tools for handling a problem of mind-boggling scope.

Right now, most attempts to model the brain look only at the neurons, and disregard the glial cells. Now, there’s value to this. The brain is really (really really really) complex, and just developing tools able to model billions of cells and hundreds or thousands of billions of interconnections is really, really hard. We’re laying the foundation, even with simple models, that lets us construct the computational and informatics tools for handling a problem of mind-boggling scope.