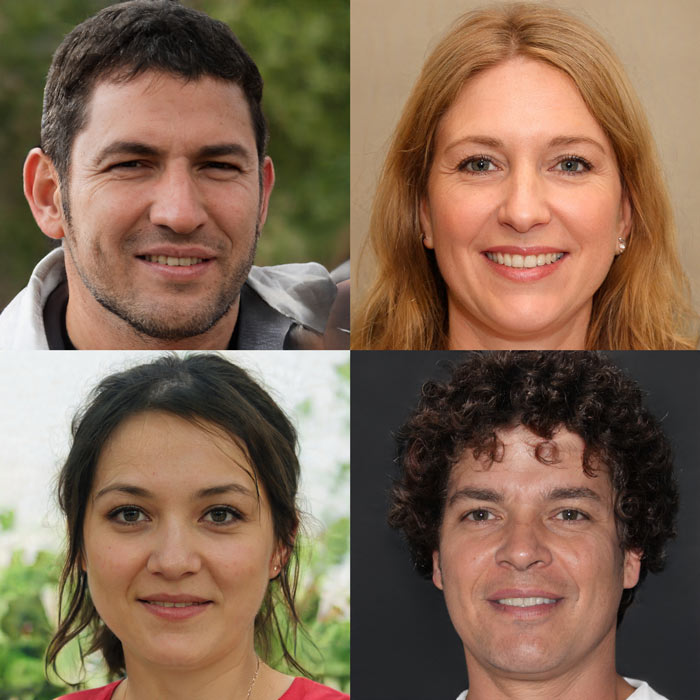

I’ve been thinking a lot about machine learning lately. Take a look at these images:

These people do not exist. They’re generated by a neural net program at thispersondoesnotexist.com, a site that uses Nvidia’s StyleGAN to generate images of faces.

StyleGAN is a generative adversarial network (GAN), a type of neural network that, in this case, was trained on tens of thousands of photos of faces. One part of the network (the generator) produced images of faces, and another part of the same program (the discriminator, the “adversarial” part) tried to tell them apart from real photos. When a generated face fooled the discriminator, the connections that produced it were strengthened; when it didn’t, they were weakened. And so, over many iterations, its ability to create faces grew.
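If you'd like to see that tug-of-war in miniature, here's a toy sketch. It is nothing like StyleGAN itself: instead of faces, the "real data" is just numbers clustered around 4, the generator is a single line (`a * z + b`), and the discriminator is a single sigmoid. All the names here (`gan_step`, `train_toy_gan`, the parameters `a`, `b`, `w`, `c`) are made up for illustration, and the gradients are worked out by hand under those assumptions.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function, returns a value in (0, 1)."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def gan_step(params, x_real, z, lr=0.01):
    """One adversarial update on a toy 1-D GAN (illustrative only).

    params = (a, b, w, c): the generator is G(z) = a*z + b and the
    discriminator is D(x) = sigmoid(w*x + c), its guess that x is real.
    """
    a, b, w, c = params
    x_fake = a * z + b

    # Discriminator step: push D(x_real) toward 1 and D(x_fake) toward 0.
    d_real = sigmoid(w * x_real + c)
    d_fake = sigmoid(w * x_fake + c)
    w -= lr * ((d_real - 1.0) * x_real + d_fake * x_fake)
    c -= lr * ((d_real - 1.0) + d_fake)

    # Generator step: nudge a and b so the updated discriminator
    # rates the fake sample as more "real" (minimizes -log D(G(z))).
    d_fake = sigmoid(w * x_fake + c)
    grad_x = (d_fake - 1.0) * w
    a -= lr * grad_x * z
    b -= lr * grad_x
    return (a, b, w, c)


def train_toy_gan(steps=2000, seed=0):
    """Run many steps; the 'real' samples come from a bell curve around 4."""
    rng = random.Random(seed)
    params = (1.0, 0.0, 0.0, 0.0)
    for _ in range(steps):
        params = gan_step(params, rng.gauss(4.0, 0.5), rng.gauss(0.0, 1.0))
    return params


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print("generator offset b:", b)
```

Don't expect it to settle neatly on 4. Even toy GANs like this one tend to circle their target rather than land on it, which is part of why training the real thing is so finicky.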

If you look closely at these faces, there’s something a little…off about them. They don’t look quiiiiite right, especially where clothing is concerned (look at the shoulder of the man in the upper left).

Still, that doesn’t prevent people from using fake images like these for political purposes. The “Hunter Biden story” was “broken” by a “security researcher” who does not exist, using a photo from This Person Does Not Exist, for example.

There are ways you can spot StyleGAN-generated faces. For example, the people at This Person Does Not Exist found that the eyes tended to look weird, detached from the faces, so the researchers fixed the problem in a brute-force but clever way: they trained the StyleGAN to put the eyes in the same place on every face, regardless of which way it was turned. Faces generated at TPDNE always have the major features in the same place: eyes the same distance apart, nose in the same place, and so on.

StyleGAN can also generate other types of images, as you can see on This Waifu Does Not Exist:

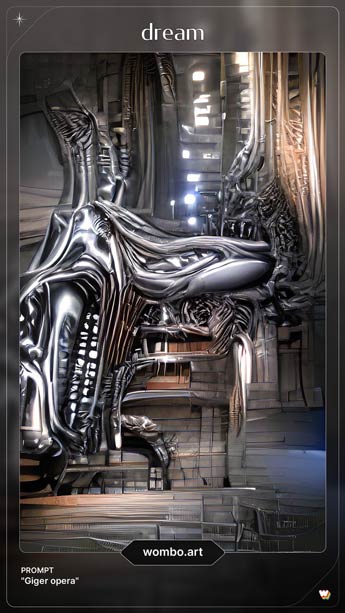

Okay, so what happens if you train a GAN on images that aren’t faces?

That turns out to be a lot harder. The real trick there is tagging the images, so the GAN knows what it’s looking at. That way you can, for example, teach it to give you a building when you ask it for a building, a face when you ask it for a face, and a cat when you ask it for a cat.
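One textbook way to hand a tag to a GAN is to glue a "one-hot" label onto the random noise the generator starts from, so the same network can be steered toward a building, a face, or a cat. The sketch below shows only that input-building step. The three-tag vocabulary and the function names are hypothetical, and I'm not claiming this is how any particular app does it.

```python
import random

# Hypothetical tag vocabulary, for illustration only.
LABELS = ["building", "face", "cat"]


def one_hot(label, labels=LABELS):
    """Encode a tag as a list with 1.0 in its slot and 0.0 elsewhere."""
    if label not in labels:
        raise ValueError(f"unknown label: {label!r}")
    return [1.0 if name == label else 0.0 for name in labels]


def generator_input(label, noise_size=4, rng=random):
    """Build a conditional generator input: random noise plus the tag."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_size)]
    return noise + one_hot(label)


if __name__ == "__main__":
    # Same tag, different noise: a different cat each time.
    print(generator_input("cat"))
    print(generator_input("cat"))
```

The noise supplies the variety and the tag supplies the subject, which is why asking for the same thing twice gives you two different pictures.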

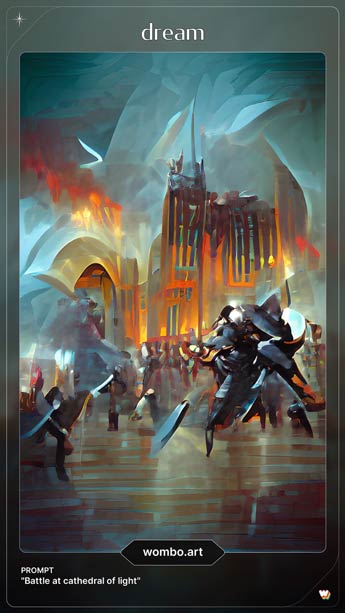

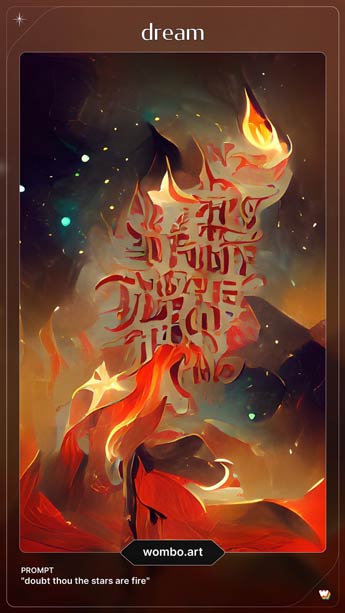

And that’s exactly what the folks at WOMBO have done. The WOMBO Dream app generates random images from any words or phrases you give it.

And I do mean “any” words or phrases.

It can generate cityscapes:

Buildings:

Landscapes:

Scenes:

Body horror:

Abstract ideas:

On and on, endless varieties of images…I can play with it for hours (and I have!).

And believe me when I say it can generate images for anything you can think of. I’ve tried to throw things at it to stump it, and it’s always produced something that looks in some way related to whatever I’ve tossed its way.

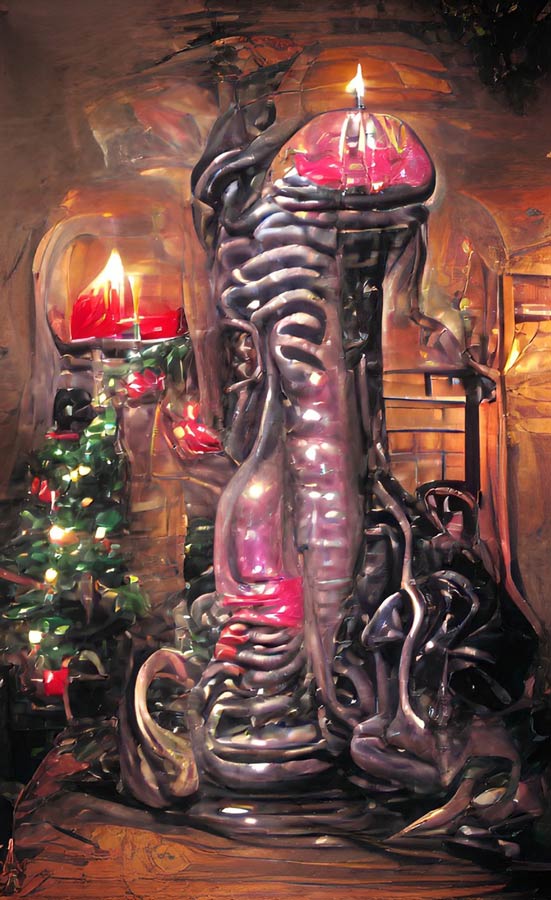

War on Christmas? It’s got you covered:

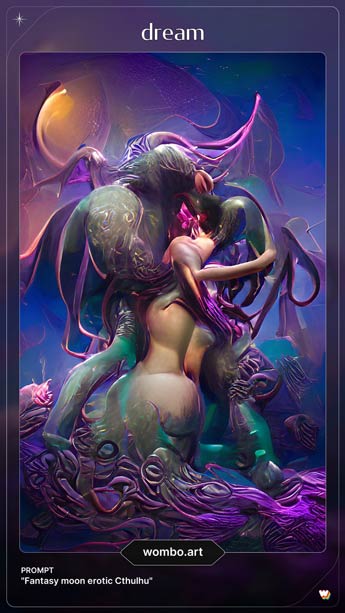

I’ve even tried “Father Christmas encased in Giger sex tentacle”:

Not a bad effort, all things considered.

But here’s the thing:

If you look at these images, they’re all emotionally evocative; they all seem to get the essence of what you’re aiming at, but they lack detail. The parts don’t always fit together right. “Dream” is a good name: the images the GAN produces are hazy, dreamlike, insubstantial, without focus or particular features. The GAN clearly does not understand anything it creates.

And still, if an artist twenty years ago had developed this particular style the old-fashioned way, I have no doubt that he or she or they would have become very popular indeed. AI is catching up to human capability in domains we have long thought required some spark of human essence, and doing it scary fast.

I’ve been chewing on what makes WOMBO Dream images so evocative. Is it simply promiscuous pattern recognition, with the AI creating novel patterns we’ve never seen before by chewing up and spitting out fragments of things it doesn’t understand, causing us to dig for meaning where there isn’t any?

Given how fast generative machine learning programs are progressing, I am confident I will live to see AI-generated art that is as good as anything a human can do. And yet, I still don’t think the machines that create it will have any understanding of what they’re creating.

I’m not sure how I feel about that.