[Note: There is a followup to this essay here]

Ray Kurzweil pisses me off.

His name came up last night at Science Pub, which is a regular event, hosted by a friend of mine, that brings in guest speakers on a wide range of different science and technology related topics to talk in front of an audience at a large pub. There’s beer and pizza and really smart scientists talking about things they’re really passionate about, and if you live in Portland, Oregon (or Eugene or Hillsboro; my friend is branching out), I can’t recommend them enough.

Before I can talk about why Ray Kurzweil pisses me off–or, more precisely, before I can talk about some of the reasons Ray Kurzweil pisses me off, as an exhaustive list would most surely strain my patience to write and your patience to read–it is first necessary to talk about what I call the “da Vinci effect.”

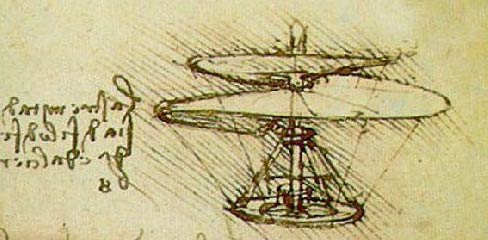

Leonardo da Vinci is, in my opinion, one of the greatest human beings who has ever lived. He embodies the best in our desire to learn; he was interested in painting and sculpture and anatomy and engineering and just about every other thing worth knowing about, and he took time off of creating some of the most incredible works of art the human species has yet created to invent the helicopter, the armored personnel carrier, the barrel spring, the Gatling gun, and the automated artillery fuze…pausing along the way to record innovations in geography, hydraulics, music, and a whole lot of other stuff.

However, most of his inventions, while sound in principle, were crippled by the fact that he could not conceive of any power source other than muscle power. The steam engine was still more than two and a half centuries away; the internal combustion engine, another half-century or so after that.

Da Vinci had the ability to anticipate the broad outlines of some really amazing things, but he could not build them, because he lacked one essential element whose design and operation were way beyond him and the society he lived in, both in theory and in practice.

I tend to call this the “da Vinci effect”–the ability to see how something might be possible, but to be missing one key component that’s so far ahead of the technology of the day that it’s not possible even to hypothesize, except perhaps in broad, general terms, how it might work, and not possible even to anticipate with any kind of accuracy how long it might take before the thing becomes reachable.

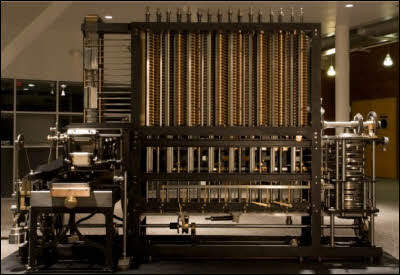

Charles Babbage’s Analytical Engine, the programmable successor to his Difference Engine, is another example of an idea whose realization was held back by the da Vinci effect.

Babbage reasoned–quite accurately–that it was possible to build a machine capable of mathematical computation. He also reasoned that it would be possible to construct such a machine in such a way that it could be fed a program–a sequence of logical steps, each representing some operation to carry out–and that on the conclusion of such a program, the machine would have solved a problem. This last bit differentiated his conception of a computational engine from other devices (such as the Antikythera mechanism) which were built to solve one particular problem and could not be programmed.

The technology of the time, specifically with respect to precision metal casting, meant his design for a mechanical computer was never realized in his lifetime. Today, we use devices that operate by principles he imagined every day, but they aren’t mechanical; in place of gears and levers, they use gates that control the flow of electrons–something he could never have envisioned given the understanding of his time.

One of the speakers at last night’s Science Pub was Dr. Larry Sherman, a neurobiologist and musician who runs a research lab here in Oregon that’s currently doing a lot of cutting-edge work in neurobiology. He’s one of my heroes[1]; I’ve seen him present several times now, and he’s a fantastic speaker.

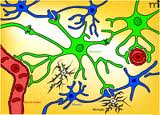

Now, when I was in school studying neurobiology, things were very simple. You had two kinds of cells in your brain: neurons, which did all the heavy lifting involved in the process of cognition and behavior, and glial cells, which provided support for the neurons, nourished them, repaired damage, and cleaned up the debris from injury or dead cells.

There are a couple of broad classifications for glial cells: astrocytes and microglia. Astrocytes, shown in green in this picture, provide a physical scaffold to hold neurons (in blue) in place. They wrap the axons of neurons in protective sheaths and they absorb nutrients and oxygen from blood vessels, which they then pass on to the neurons. Microglia are cells that are kind of like little amoebas; they swim around in your brain locating dead or dying cells, pathogens, and other forms of debris, and eating them.

So that’s the background.

Ray Kurzweil is a self-styled “futurist,” transhumanist, and author. He’s also a Pollyanna with little real rubber-on-road understanding of the challenges that nanotechnology and biotechnology face. He talks a great deal about AI, human/machine interface, and uploading–the process of modeling a brain in a computer such that the computer is conscious and aware, with all the knowledge and personality of the person being modeled.

He gets a lot of it wrong, but it’s the last bit he gets really wrong. Not the general outlines, mind you, but certainly the timetable. He’s the guy who looks at da Vinci’s notebook and says “Wow, a flying machine? That’s awesome! Look how detailed these drawings are. I bet we could build one of these by next spring!”

Anyway, his name came up during the Q&A at Science Pub, and I kind of groaned. Not as much as I did when Dr. Sherman suggested that a person whose neurons had been replaced with mechanical analogues wouldn’t be a person any more, but I groaned nonetheless.

Afterward, I had a chance to talk to Dr. Sherman briefly. The conversation was short, only just long enough for him to completely blow my mind, to make me believe that a lot of ideas about uploading are limited by the da Vinci effect, and to suggest that much brain modeling research currently going on is (in his words) “totally wrong”.

It turns out that most of what I was taught about neurobiology was utterly wrong. Our understanding of the brain has exploded in the last few decades. We’ve learned that people can and do grow new brain cells all the time, throughout their lives. And we’ve learned that the glial cells do a whole lot more than we thought they did.

Astrocytes, long believed to be nothing but scaffolding and cafeteria workers, are strongly implicated in learning and cognition, as it turns out. They not only support the neurons in your brain, but they guide the process of new neural connections, the process by which memory and learning work. They promote the growth of new neural pathways, and they also determine (at least to some degree) how and where those new pathways form.

In fact, human beings have more different types of astrocytes than other vertebrates do. Apparently, according to my brief conversation with Dr. Sherman, researchers have taken human astrocytes and implanted them in developing mice, and discovered an apparent increase in cognitive functions of those mice even though the neurons themselves were no different.

And, more recently, it turns out that microglia–the garbage collectors and scavengers of the brain–can influence high-order behavior as well.

The last bit is really important, and it involves hox genes.

A quick overview of hox genes. These are genes which control the expression of other genes, and which are involved in determining how an organism’s body develops. You (and monkeys and mice and fruit flies and earthworms) have hox genes–pretty much the same hox genes, in fact–that represent an overall “body image plan”. They do things like say “Ah, this bit will become a torso, so I will switch on the genes that correspond to forming arms and legs here, and switch off the genes responsible for making eyeballs or toes.” Or “This bit is the head, so I will switch on the eyeball-forming genes and the mouth-forming genes, and switch off the leg-forming genes.”

Mutations to hox genes generally cause gross physical abnormalities. In fruit flies, incorrect hox gene expression can cause the fly to sprout legs instead of antennae, or to grow wings from strange parts of its body. In humans, hox gene malfunctions can cause a number of really bizarre and usually fatal birth defects–growing tiny limbs out of eye sockets, that sort of thing.

And it appears that a hox gene mutation can result in obsessive-compulsive disorder.

And more bizarrely than that, this hox gene mutation affects the way microglia form.

Think about how bizarre that is for a minute. The genes responsible for regulating overall body plan can cause changes in microglia–little amoeba scavengers that roam around in the brain. And that change to those scavengers can result in gross high-level behavioral differences.

Not only are we not in Kansas any more, we’re not even on the same continent. This is absolutely not what anyone would expect, given our knowledge of the brain even twenty years ago.

Which brings us back ’round to da Vinci.

Right now, most attempts to model the brain look only at the neurons, and disregard the glial cells. Now, there’s value to this. The brain is really (really really really) complex, and just developing tools able to model billions of cells and hundreds or thousands of billions of interconnections is really, really hard. We’re laying the foundation, even with simple models, that lets us construct the computational and informatics tools for handling a problem of mind-boggling scope.
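
To get a feel for that scale, here is a rough back-of-envelope sketch in Python. It only estimates how much storage a bare list of connections would need. The 16 bytes per connection is purely my own assumption (two 64-bit cell IDs and nothing else), and the connection counts are just the “hundreds or thousands of billions” range above, not measured figures.

```python
def connection_storage_terabytes(connections: int, bytes_per_connection: int = 16) -> float:
    """Storage for a bare connection list, in terabytes (10**12 bytes).

    bytes_per_connection is an illustrative assumption: two 64-bit cell IDs,
    with no connection strength, no cell state, and nothing about glial cells.
    """
    return connections * bytes_per_connection / 1e12


# Illustrative connection counts taken from the range mentioned in the text.
scenarios = [
    ("hundreds of billions", 100 * 10**9),
    ("thousands of billions", 1000 * 10**9),
]

for label, connections in scenarios:
    terabytes = connection_storage_terabytes(connections)
    print(f"{label}: about {terabytes:.1f} TB just to list the connections")
```

Under those assumptions, the wiring diagram alone runs to terabytes, before storing anything about what any cell is actually doing.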

But there’s still a critical bit missing. Or critical bits, really. We’re missing the computational bits that would allow us to model a system of this size and scope, or even to be able to map out such a system for the purpose of modeling it. A lot of folks blithely assume Moore’s Law will take care of that for us, but I’m not so sure. Even assuming a computer of infinite power and capability, if you want to upload a person, you still have the task of being able to read the states and connection pathways of many billions of very small cells, and I’m not convinced we even know quite what those tools look like yet.

But on top of that, when you consider that we’re missing a big part of the picture of how cognition happens–we’re looking at only one part of the system, and the mechanism by which glial cells promote, regulate, and influence high-level cognitive tasks is astonishingly poorly understood–it becomes clear (at least to me, anyway) that uploading is something that isn’t going to happen soon.

We can, like da Vinci, sketch out the principles by which it might work. There is nothing in the laws of physics that suggests it can’t be done, and in fact I believe that it absolutely can and will, eventually, be done.

But the more I look at the problem, the more it seems to me that there’s a key bit missing. And I don’t even think we’re in a position yet to figure out what that key bit looks like, much less how it can be built. It may be that when we do model brains, the model isn’t going to look anything like what we think of as a conventional computer, much as the general-purpose programmable devices we eventually built look nothing like Babbage’s engines.

[1] Or would be, if it weren’t for the fact that he rejects personhood theory, which is something I’m still a bit surprised by. If I ever have the opportunity to talk with him over dinner, I want to discuss personhood theory with him, oh yes.