One of my favorite writers at the moment is Iain M. Banks. Under that name, he writes science fiction set in a post-scarcity society called the Culture, where he deals with political intrigue and moral issues and technology and society on a scale that almost nobody else has ever tried. (In fact, his novel Use of Weapons is my all-time favorite book, and I’ve written about it at great length here.) Under the name Iain Banks, he writes grim and often depressing novels not related to science fiction, and wins lots of awards.

The Culture novels are interesting to me because they are imagination writ large. Conventional science fiction, whether it’s the cyberpunk dystopia of William Gibson or the bland, banal sterility of (God help us) Star Trek, imagines a world that’s quite recognizable to us…or at least to those of us who are white 20th-century Westerners. (It’s always bugged me that the alien races in Star Trek are not really very alien at all; they are more like conventional middle-class white Americans than even, say, Japanese society is, and way less alien than the Serra do Sol tribe of the Amazon basin.) These stories imagine a future that’s pretty much the same as the present, only more so; “Bones” McCoy, a physician, talks about how death at the ripe old age of 80 is part of Nature’s plan, as he rides around in a spaceship made by welding plates of steel together.

Image from Wikimedia Commons by Hill – Giuseppe Gerbino

In the Culture, by way of contrast, everything is made by atomic-level nanotech assembly processes. Macroengineering exists on a huge scale, so huge that by far the majority of the Culture’s citizens live on orbitals–artificially constructed habitats encircling a star. (One could live on a planet, of course, in much the way that a modern person could live in a cave if she wanted to; but why?) The largest spacecraft, General Systems Vehicles, have populations that range from the tens of millions to six billion or more. Virtually limitless sources of energy (something I’m planning to blog about later) and virtually unlimited technical ability to make just about anything from raw atoms mean that there is no such thing as scarcity; whatever any person needs, that person can have, immediately and for free. And the definition of “person” goes much further, too; whereas in the Star Trek universe, people are still struggling with the idea that a sentient android might be a person, in the Culture, personhood theory (something else about which I plan to write) is the bedrock upon which all other moral and ethical systems are built. Many of the Culture’s citizens are drones or Minds–non-biological computers, of a sort, that range from about as smart as a human to millions of times smarter. Calling them “computers” really is an injustice; it’s about on par with calling a modern supercomputer a string of counting beads. Spacecraft and orbitals are controlled by vast Minds far in advance of unaugmented human intellect.

I had a dream, a while ago, about the Enterprise from Star Trek encountering a General Systems Vehicle, and the hilarity that ensued when they spoke to each other: “Why, hello, Captain Kirk of the Enterprise! I am the GSV Total Internal Reflection of the Culture. You came here in that? How…remarkably courageous of you!”

And speaking of humans…

The biological people in the Culture are the products of advanced technology just as much as the Minds are. They have been altered in many ways; their immune systems are far more resilient, they have much greater conscious control over their bodies; they have almost unlimited life expectancies; they are almost entirely free of disease and aging. Against this backdrop, the stories of the Culture take place.

Banks has written a quick overview of the Culture, and its technological and moral roots, here. A lot of the Culture novels are, in a sense, morality plays; Banks uses the idea of a post-scarcity society to examine everything from bioethics to social structures to moral values.

In the Culture novels, much of the society is depicted as pretty Utopian. Why wouldn’t it be? There’s no scarcity, no starvation, no lack of resources or space. Because of that, there’s little need for conflict; there’s neither land nor resources to fight over. There’s very little need for struggle of any kind; anyone who wants nothing but idle luxury can have it.

For that reason, most of the Culture novels concern themselves with Contact, that part of the Culture which is involved with alien, non-Culture civilizations; and especially with Special Circumstances, that part of Contact whose dealings with other civilizations extend into the realm of covert manipulation, subterfuge, and dirty tricks.

Of which there are many, as the Culture isn’t the only technologically sophisticated player on the scene.

But I wonder…would a post-scarcity society necessarily be Utopian?

Banks makes a case, and I think a good one, for the notion that a society’s moral values depend to a great extent on its wealth and the difficulty, or lack thereof, of its existence. Certainly, there are parallels in human history. I have heard it argued, for example, that societies from harsh desert climates produce harsh moral codes, which is why we see commandments in Leviticus detailing at great length and with an almost maniacal glee who to stone, when to stone them, and where to splash their blood after you’ve stoned them. As societies become more civil and more wealthy, as every day becomes less of a struggle to survive, those moral values soften. Today, even the most die-hard evangelical “execute all the gays” Biblical literalist rarely speaks out in favor of stoning women who are not virgins on their wedding night, or executing people for picking up a bundle of sticks on the Sabbath, or dealing with the crime of rape by putting to death both the rapist and the victim.

I’ve even seen it argued that as civilizations become more prosperous, their moral values must become less harsh. In a small nomadic desert tribe, someone who isn’t a team player threatens the lives of the entire tribe. In a large, complex, pluralistic society, someone who is too xenophobic, too zealous in his desire to kill anyone not like himself, threatens the peace, prosperity, and economic competitiveness of the society. The United States might be something of an aberration in this regard, as we are both the wealthiest and also the most totalitarian of the Western countries, but in the overall scope of human history we’re still remarkably progressive. (We are becoming less so, turning more xenophobic and rabidly religious as our economic and military power wane; I’m not sure that the one is directly the cause of the other but those two things definitely seem to be related.)

In the Culture novels, Banks imagines this trend as a straight line going onward; as societies become post-scarcity, they tend to become tolerant, peaceful, and Utopian to an extreme that we would find almost incomprehensible, Special Circumstances aside. There are tiny microsocieties within the Culture that are harsh and murderously intolerant, such as the Eaters in the novel Consider Phlebas, but they are also not post-scarcity; the Eaters have created a tiny society in which they have very little and every day is a struggle for survival.

We don’t have any models of post-scarcity societies to look at, so it’s hard to do anything beyond conjecture. But we do have examples of societies that had little in the way of competition, that had rich resources and no aggressive neighbors to contend with, and had very high standards of living for the time in which they existed that included lots of leisure time and few immediate threats to their survival.

One such society might be the Aztec empire, which spread through the central parts of modern-day Mexico during the 15th and early 16th centuries. The Aztecs were technologically sophisticated and built a sprawling empire based on a combination of trade, military might, and tribute.

Because they required conquered people to pay vast sums of tribute, the Aztecs themselves were wealthy and comfortable. Though they were not industrialized, they lacked for little. Even commoners had what was for the time a high standard of living.

And yet, they were about the furthest thing from Utopian it’s possible to imagine.

The religious traditions of the Aztecs were bloodthirsty in the extreme. So voracious was their appetite for human sacrifices that they would sometimes conquer neighbors just to capture a steady stream of sacrificial victims. Commoners could make money by selling their daughters for sacrifice. Aztec records document tens of thousands of sacrifices just for the dedication of a single temple.

So they wanted for little, had no external threats, had a safe and secure civilization with a stable, thriving economy…and they turned monstrous, with a contempt for human life and a complete disregard for human value that would have made Pol Pot blush. Clearly, complex, secure, stable societies don’t always move toward moral systems that value human life, tolerate diversity, and promote individual dignity and autonomy. In fact, the Aztecs, as they became stronger, more secure, and more stable, seemed to become more bloodthirsty, not less. So why is that? What does that say about hypothetical societies that really are post-scarcity?

One possibility is that where there is no conflict, people feel a need to create it. The Aztecs fought ritual wars, called “flower wars,” with some of their neighbors–wars not over resources or land, but whose purpose was to supply humans for sacrifice.

Now, flower wars might have had a prosaic function not directly connected with religious human sacrifice, of course. Many societies use warfare as a means of disposing of populations of surplus men, who can otherwise lead to social and political unrest. In a civilization that has virtually unlimited space, that’s not a problem; in societies which are geographically bounded, it is. (Even for modern, industrialized nations.)

Still, religion unquestionably played a part. The Aztecs were bloodthirsty at least to some degree because they practiced a bloodthirsty religion, and vice versa. This, I think, indicates that a society’s moral values don’t spring entirely from what is most conducive to that society’s survival. While the things that a society must do in order to survive, and the factors that are most valuable to a society’s functioning at whatever level it finds itself, will affect that society’s religious beliefs (and those beliefs will change to some extent as the needs of the society change), there would seem to be at least some corner of a society’s moral structures that is entirely irrational and completely divorced from what would best serve that society. The Aztecs may be an extreme example of this.

So what does that mean to a post-scarcity society?

It means that a post-scarcity society, even though it has no need of war or conflict, may still have both war and conflict, despite the fact that they serve no rational role. There is no guarantee that a post-scarcity society necessarily must be a rationalist society; while reaching the point of post-scarcity does require rationality, at least in the scientific and technological arts, there’s not necessarily any compelling reason to assume that a society that has reached that point must stay rational.

And a post-scarcity society that enshrines irrational beliefs, and has contempt for the value of human life, would be a very scary thing indeed. Imagine a society of limitless wealth and technological prowess that has a morality based on a literalistic interpretation of Leviticus, for instance, in which women really are stoned to death if they aren’t virgins on their wedding night. There wouldn’t necessarily be any compelling reason for a post-scarcity society not to adopt such beliefs; after all, human beings are a renewable resource too, so it would cost the society little to treat its members with indifference.

As much as I love the Culture (and the idea of post-scarcity society in general), I don’t think it’s a given that such societies would be Utopian.

Perhaps as we continue to advance technologically, we will continue to domesticate ourselves, so that the idea of being pointlessly cruel and warlike would seem quite horrifying to our descendants who reach that point. But if I were asked to make a bet on it, I’m not entirely sure which way I’d bet.

There’s a p value associated with any experiment. For example, if someone wanted to show that watching Richard Simmons on television caused birth defects, he might take two groups of pregnant ring-tailed lemurs and put them in front of two different TV sets, one of them showing Richard Simmons reruns and one of them showing reruns of Law & Order, to see if any of the lemurs had pups that were missing legs or had eyes in unlikely places or something.
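
To make the idea a bit more concrete, here is a minimal Python sketch of how a p value might be computed for an experiment like this one. The counts are invented purely for illustration (10 lemurs per group, 4 affected in the Richard Simmons group, 1 in the Law & Order group), and both the choice of a one-sided Fisher’s exact test and the function name `fisher_one_sided` are my own assumptions; nothing about the experiment dictates them.

```python
from math import comb


def fisher_one_sided(exposed_hits, exposed_misses, control_hits, control_misses):
    """One-sided Fisher's exact test.

    Returns the probability of seeing at least `exposed_hits` affected
    animals in the exposed group if the choice of TV show made no
    difference at all.
    """
    total = exposed_hits + exposed_misses + control_hits + control_misses
    total_hits = exposed_hits + control_hits
    exposed_size = exposed_hits + exposed_misses

    # Add up the hypergeometric probabilities of every outcome at least
    # as extreme as the one observed.
    upper = min(total_hits, exposed_size)
    favorable = sum(
        comb(total_hits, k) * comb(total - total_hits, exposed_size - k)
        for k in range(exposed_hits, upper + 1)
    )
    return favorable / comb(total, exposed_size)


# Hypothetical counts, made up for illustration only.
p_value = fisher_one_sided(exposed_hits=4, exposed_misses=6,
                           control_hits=1, control_misses=9)
print(f"p = {p_value:.3f}")
```

The number that comes out is the chance of getting a split at least this lopsided by luck alone; the smaller it is, the harder it becomes to blame the result on chance.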

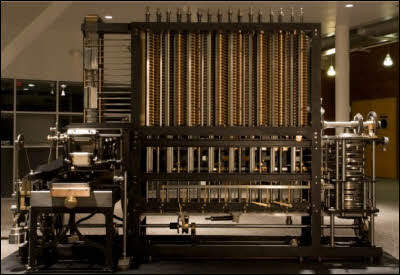

Babbage reasoned–quite accurately–that it was possible to build a machine capable of mathematical computation. He also reasoned that it would be possible to construct such a machine in such a way that it could be fed a program–a sequence of logical steps, each representing some operation to carry out–and that on the conclusion of such a program, the machine would have solved a problem. This last bit differentiated his conception of a computational engine from other devices (such as the Antikythera mechanism) which were built to solve one particular problem and could not be programmed.
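
The difference between a programmable machine and a single-purpose one can be sketched in a few lines of Python. This is only a toy illustration under my own assumptions: the `run` function, the three operation names, and the sample programs are all hypothetical, and none of it reflects how Babbage’s actual designs worked.

```python
def run(program, value):
    """Carry out each (operation, operand) step in order and return the result."""
    operations = {
        "add": lambda acc, n: acc + n,
        "sub": lambda acc, n: acc - n,
        "mul": lambda acc, n: acc * n,
    }
    for name, operand in program:
        value = operations[name](value, operand)
    return value


# Two different programs fed to the same "machine".
double_then_add_three = [("mul", 2), ("add", 3)]
add_one_then_times_ten = [("add", 1), ("mul", 10)]

print(run(double_then_add_three, 5))
print(run(add_one_then_times_ten, 5))
```

The machine itself never changes; only the list of steps does. A device built to solve one particular problem would be the equivalent of hard-wiring a single one of those lists into the mechanism.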

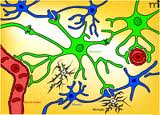

Now, when I was in school studying neurobiology, things were very simple. You had two kinds of cells in your brain: neurons, which did all the heavy lifting involved in the process of cognition and behavior, and glial cells, which provided support for the neurons, nourished them, repaired damage, and cleaned up the debris from injury or dead cells.

Right now, most attempts to model the brain look only at the neurons, and disregard the glial cells. Now, there’s value to this. The brain is really (really really really) complex, and just developing tools able to model billions of cells and hundreds or thousands of billions of interconnections is really, really hard. We’re laying the foundation, even with simple models, that lets us construct the computational and informatics tools for handling a problem of mind-boggling scope.
